Introduction

Today, we're thrilled to introduce ClipTagger-12B: a powerhouse 12B-parameter VLM that crushes Claude 4 Sonnet on video frame captioning while costing 17x less.

Developed through a collaboration between Inference.net and Grass, this open-source model represents a new class of specialized AI: workhorse models built for production workloads at internet scale.

The Problem: Video Understanding is Too Expensive

Every day, billions of video frames need to be processed for search, moderation, accessibility, and analytics. But the economics don't work. With frontier models charging $3-15 per million tokens, even modest video processing becomes prohibitively expensive.

Consider a media company processing 100,000 video frames daily. Using GPT-4.1, this would cost over $186,000 annually. For a platform processing millions of frames, costs quickly reach millions of dollars. These economics have kept advanced video understanding limited to only the largest technology companies.

ClipTagger-12B: A Workhorse Model for Production

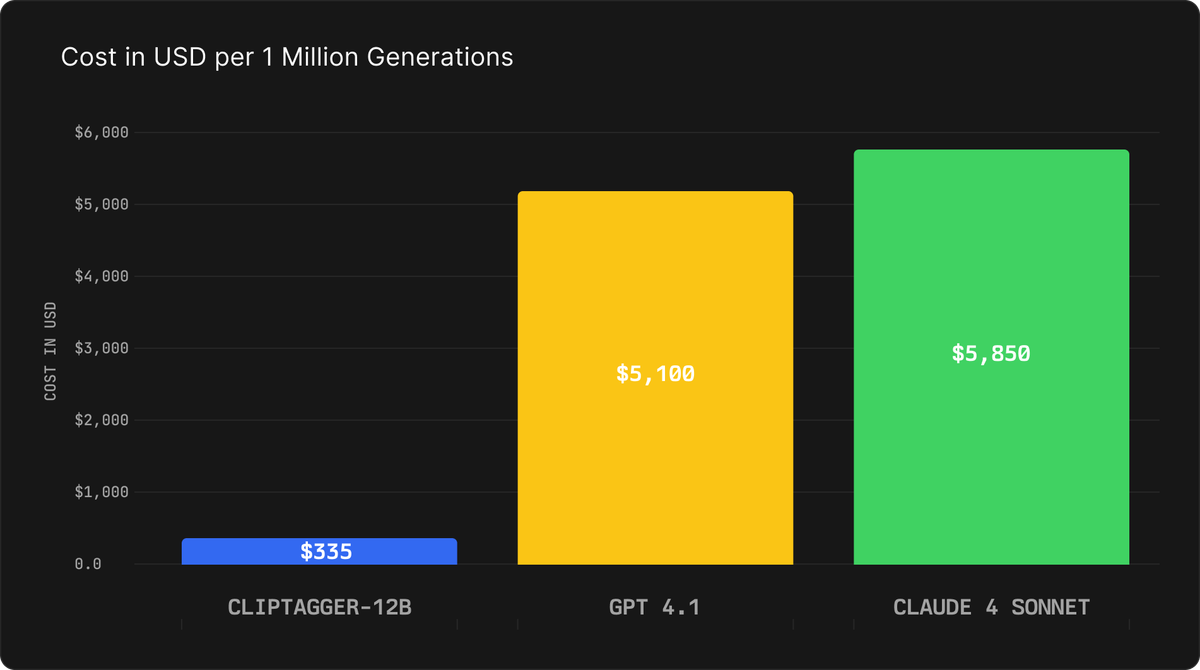

ClipTagger-12B solves this problem. At $0.30 per million input tokens and $0.50 per million output tokens, it delivers a 15x cost reduction compared to GPT-4.1 and 17x compared to Claude 4 Sonnet. For typical usage of 700 input tokens and 250 output tokens per frame, this equals $335 per million generations versus $5,100 for GPT-4.1 or $5,850 for Claude 4 Sonnet.
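The per-frame figure follows directly from the token prices. Below is a minimal sketch of that arithmetic, assuming the 700-input/250-output token profile quoted above. The function and constant names are illustrative, and the GPT-4.1 and Claude 4 Sonnet numbers are the per-million-generation totals quoted in this post rather than values derived from their price lists.

```python
# ClipTagger-12B prices quoted in this post, in USD per million tokens.
INPUT_PRICE_PER_M = 0.30
OUTPUT_PRICE_PER_M = 0.50

# Per-million-generation totals quoted in this post for the comparison models.
QUOTED_COST_PER_M_GENERATIONS = {"GPT-4.1": 5100.0, "Claude 4 Sonnet": 5850.0}


def cost_per_frame(input_tokens: int = 700, output_tokens: int = 250) -> float:
    """Cost in USD of captioning one frame at the quoted token prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def annual_cost(frames_per_day: int, usd_per_frame: float) -> float:
    """Yearly spend for a steady daily volume, assuming 365 days."""
    return frames_per_day * 365 * usd_per_frame


cliptagger_per_m = cost_per_frame() * 1_000_000
print(f"ClipTagger-12B: ${cliptagger_per_m:,.0f} per million generations")
for name, quoted in QUOTED_COST_PER_M_GENERATIONS.items():
    print(f"{name}: {quoted / cliptagger_per_m:.1f}x the cost")
print(f"100,000 frames/day for a year: ${annual_cost(100_000, cost_per_frame()):,.0f}")
```

Under these assumptions, the 100,000-frames-per-day workload from the previous section comes to roughly $12,200 per year on ClipTagger-12B.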

ClipTagger-12B isn't just another research model. It's a workhorse designed specifically for the demands of production video processing. The model generates structured JSON outputs for every video frame, making it ideal for:

Building searchable video databases at massive scale. The structured JSON output enables precise search capabilities. Natural language queries like "outdoor cooking tutorials with professional equipment" can match against specific fields in the JSON, returning results based on actual visual content rather than just metadata or titles.

Automating content moderation across platforms. The structured format allows moderation rules to be expressed as database queries. Platforms can flag content based on specific combinations of detected objects, actions, and environments, enabling more nuanced moderation policies that reduce false positives.

Creating accessibility tools with detailed scene descriptions. The comprehensive JSON output provides rich detail about objects, actions, and environments in each frame, making video content more accessible to visually impaired users through detailed audio descriptions.

Tracking brand visibility and ad verification. Advertisers can verify their ads appeared as contracted with concrete proof. Brands are detected in the logos field and products are identified in objects, enabling automated tracking of screen time and context for each appearance.

Extracting insights from video libraries. Media companies can find all footage containing certain objects or scenes, track production style trends, or identify reusable segments without expensive ML pipelines.
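As a rough illustration of how these use cases reduce to simple queries over the structured output, here is a minimal sketch over in-memory records. The field names (objects, actions, environment, logos) follow the model's output schema. The sample frames, the `video_id` and `timestamp_s` bookkeeping keys, the helper names, and the example rule are all hypothetical, and a production system would presumably run equivalent queries in a database or search index.

```python
from collections import Counter

# Hypothetical per-frame records: model output plus our own bookkeeping keys.
frames = [
    {"video_id": "v1", "timestamp_s": 0, "objects": ["Grill", "Tongs"],
     "actions": ["Cooking"], "environment": "An outdoor patio", "logos": ["Acme"]},
    {"video_id": "v1", "timestamp_s": 1, "objects": ["Grill"],
     "actions": [], "environment": "An outdoor patio", "logos": ["Acme"]},
    {"video_id": "v2", "timestamp_s": 0, "objects": ["Knife"],
     "actions": ["Threatening gesture"], "environment": "A dark alley", "logos": []},
]


def _contains(values, term):
    """Case-insensitive substring match against a list of strings."""
    return any(term.lower() in v.lower() for v in values)


def find_frames(records, obj=None, action=None, environment=None):
    """Return the records that match every criterion that was supplied."""
    hits = []
    for r in records:
        if obj and not _contains(r["objects"], obj):
            continue
        if action and not _contains(r["actions"], action):
            continue
        if environment and environment.lower() not in r["environment"].lower():
            continue
        hits.append(r)
    return hits


def logo_frame_counts(records):
    """Count frames per (video, logo) pair as a simple proxy for screen time."""
    counts = Counter()
    for r in records:
        for logo in set(r["logos"]):
            counts[(r["video_id"], logo)] += 1
    return counts


# Hypothetical moderation rule: a knife combined with a threatening action.
flagged = find_frames(frames, obj="knife", action="threatening")
# Search-style query: grills in outdoor settings.
outdoor_grilling = find_frames(frames, obj="grill", environment="outdoor")
```

Because every frame shares the same fields, the same filter function serves search, moderation, and library analytics; only the criteria change.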

Performance That Matches Frontier Models

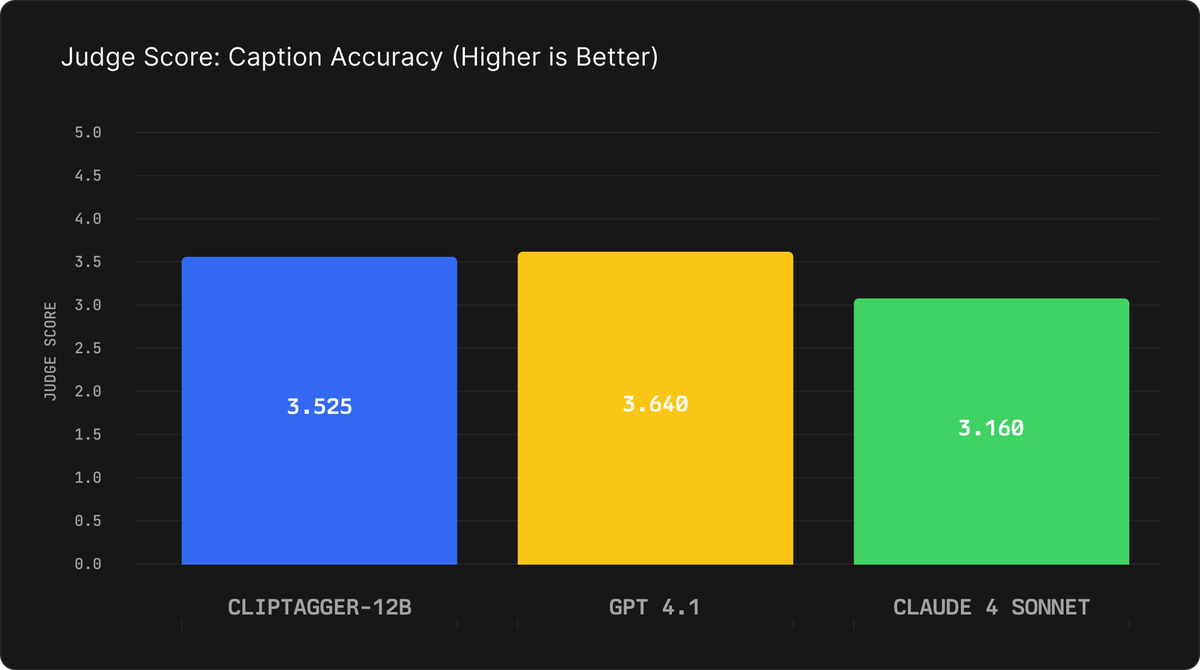

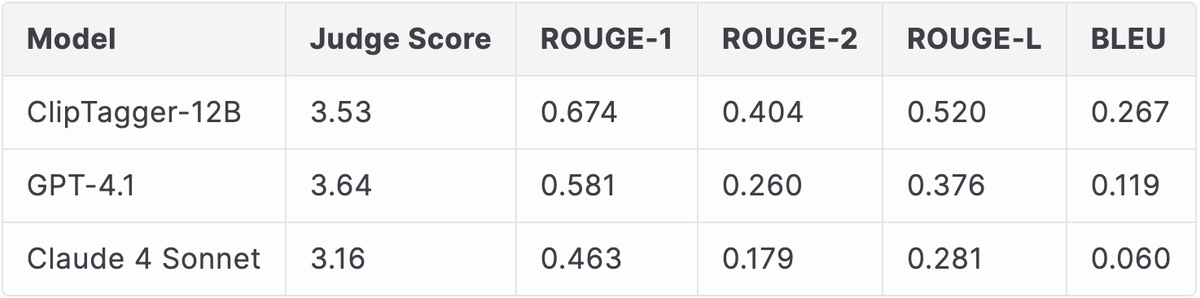

ClipTagger-12B achieves quality on par with GPT-4.1 while significantly outperforming Claude 4 Sonnet. Using Gemini-2.5-Pro as an independent judge to evaluate caption quality, the model scores 3.53 compared to 3.64 for GPT-4.1 and 3.16 for Claude 4 Sonnet.

When measured against the teacher model's outputs, ClipTagger-12B demonstrates strong alignment across all metrics:

The ROUGE and BLEU scores measure alignment with the teacher model's annotations, showing that ClipTagger-12B successfully captured the teacher's capabilities through distillation. The model achieves 67.4% ROUGE-1, 40.4% ROUGE-2, 52.0% ROUGE-L, and 26.7% BLEU, indicating that our distillation process effectively transferred the teacher model's knowledge into the smaller, more efficient ClipTagger-12B.
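For readers unfamiliar with these metrics, the following is a minimal sketch of ROUGE-1 computed as a unigram-overlap F1 score. It assumes lowercasing and whitespace tokenization. The post does not say which ROUGE implementation or variant (recall, precision, or F1) produced the numbers above, so this illustrates the idea rather than reproducing the evaluation.

```python
from collections import Counter


def rouge_1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between a candidate caption and a reference caption."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per token (clipped overlap).
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


student = "a wooden path through grass"
teacher = "a wooden boardwalk through grass"
print(f"ROUGE-1 F1: {rouge_1_f1(student, teacher):.2f}")
```

In this toy pair, four of the five unigrams match in each direction, so precision, recall, and F1 are all about 0.8.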

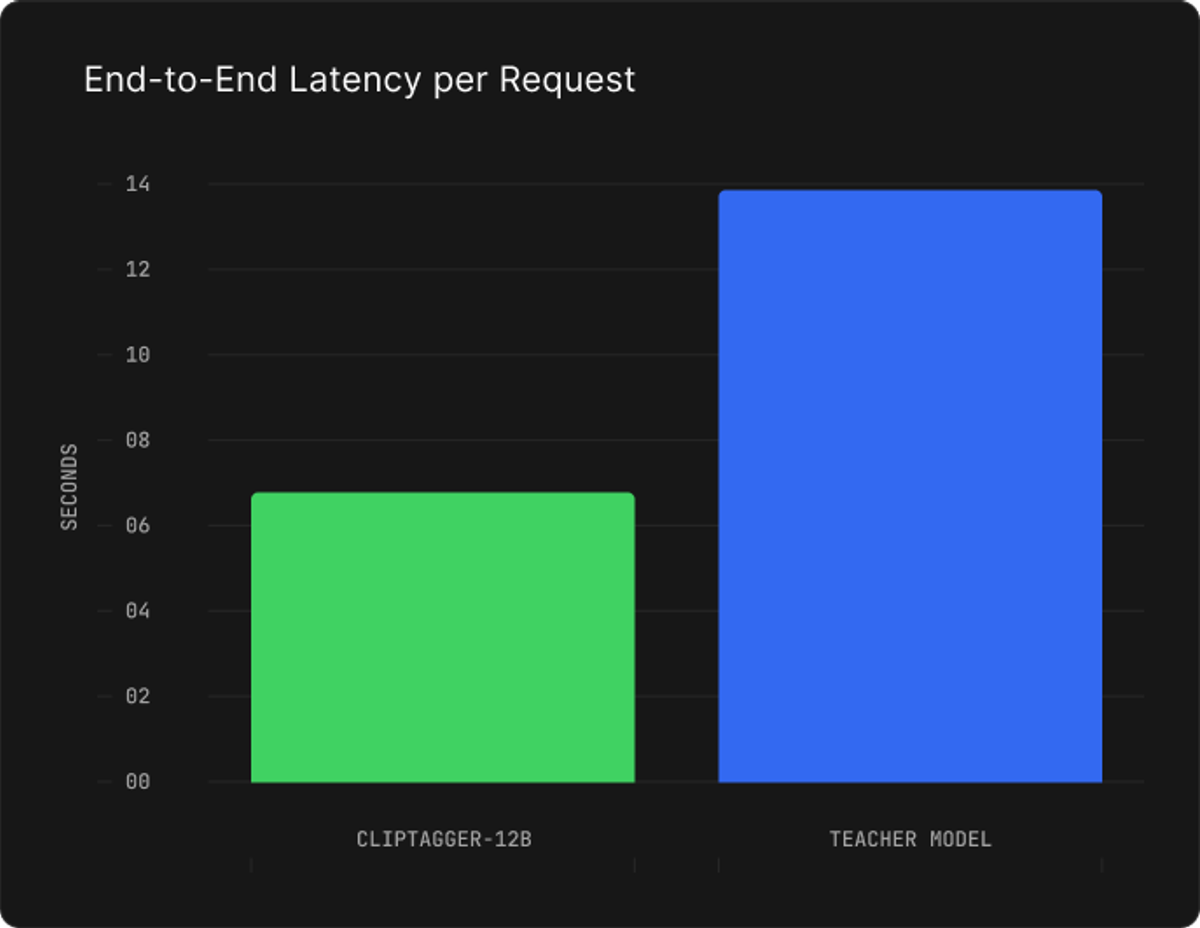

Beyond quality metrics, ClipTagger-12B delivers significantly faster inference. The model processes frames in approximately 6.5 seconds end-to-end, compared to 13.5 seconds for the teacher model, enabling high-throughput video processing applications.

Structured Output Built for Production Systems

ClipTagger-12B outputs structured JSON with a consistent schema for every frame. Given a nature scene with a wooden boardwalk through grassland, it outputs:

    {
      "description": "A wooden boardwalk path extends from the foreground into the distance, cutting through a field of tall, vibrant green grass. The path is flanked on both sides by the dense grass. In the background, a line of trees is visible on the horizon under a blue sky with scattered white clouds.",
      "objects": [
        "Wooden boardwalk",
        "Tall green grass",
        "Blue sky",
        "White clouds",
        "Trees"
      ],
      "actions": [],
      "environment": "An outdoor, natural landscape, likely a marsh or wetland, on a clear day. The scene is characterized by a wooden boardwalk, lush green vegetation, and a bright blue sky with wispy clouds.",
      "content_type": "real-world footage",
      "specific_style": "landscape photography",
      "production_quality": "professional photography",
      "summary": "A wooden boardwalk path winds through a lush green field under a bright blue sky with scattered clouds.",
      "logos": []
    }

This structure turns unstructured video into queryable data. Each field serves a specific purpose: objects enables product detection, actions captures what's happening, environment provides context, and production_quality helps filter content types. The schema was refined through months of iteration with Wynd to maximize utility while maintaining simplicity.
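A consistent schema also makes the output easy to validate before it enters a downstream system. The sketch below parses one response into a typed record and rejects payloads with missing fields. The field names come from the example above; the `FrameCaption` class, the `parse_caption` helper, and the shortened sample payload are hypothetical and not part of any official client.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class FrameCaption:
    """Typed view of one frame's output, mirroring the fields shown above."""
    description: str
    objects: list
    actions: list
    environment: str
    content_type: str
    specific_style: str
    production_quality: str
    summary: str
    logos: list


def parse_caption(raw: str) -> FrameCaption:
    """Parse a JSON string and fail loudly if any expected field is absent."""
    data = json.loads(raw)
    expected = {f.name for f in fields(FrameCaption)}
    missing = expected - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    # Ignore any unexpected extra keys rather than passing them to the constructor.
    return FrameCaption(**{name: data[name] for name in expected})


# Shortened version of the example output above, used only for illustration.
raw = json.dumps({
    "description": "A wooden boardwalk path extends into the distance.",
    "objects": ["Wooden boardwalk", "Tall green grass", "Blue sky"],
    "actions": [],
    "environment": "An outdoor, natural landscape on a clear day.",
    "content_type": "real-world footage",
    "specific_style": "landscape photography",
    "production_quality": "professional photography",
    "summary": "A wooden boardwalk winds through a green field.",
    "logos": [],
})
caption = parse_caption(raw)
print(caption.objects, caption.content_type)
```

Validating at the boundary keeps malformed responses out of the index, so later queries can rely on every field being present.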

Technical Implementation

ClipTagger-12B is based on the Gemma-12B architecture, trained through knowledge distillation from a frontier vision-language model. We used one million carefully curated video frames from Grass's extensive video catalog for dataset creation.

The distillation process captured the teacher model's performance while reducing inference costs by 95%. We achieved similar training results with both 12B and 27B variants of this distilled model, but the 12B model was an easy choice for its higher throughput and lower cost.

ClipTagger-12B is quantized to FP8 precision and shows no measurable quality loss vs BF16 based on judge scores. It’s specifically tuned for RTX 40-series and H100 GPUs, leveraging native FP8 support for high-throughput inference, and runs efficiently on a single 80 GB GPU.

Proven at Billion-Frame Scale

ClipTagger-12B has been tested and proven on billion-scale video libraries through our partnership with Wynd Labs. The model scales linearly with constant per-frame costs of $0.000335, whether processing thousands or billions of frames. This predictable pricing enables accurate budget planning even for the largest video libraries.

For high-throughput workloads, we support batch processing with webhook callbacks. Submit thousands of frames for asynchronous processing and receive results when complete. Enterprise customers can deploy on-premise or in their cloud with our containerized packages.

If you're planning to use ClipTagger-12B for large-scale workloads or search applications, contact us directly at partners@inference.net. We'll work with you to optimize deployment and ensure success at scale.

Custom Model Development

For organizations with unique requirements, we offer Custom LLM Training using the same distillation infrastructure that created ClipTagger-12B. Our process delivers:

- 50% faster generations with reduced latency

- Up to 95% cost reduction versus closed-source models

- Full model ownership with no vendor lock-in

- 30-day development from data to production deployment

Our team handles everything: data curation, model training, GPU procurement, benchmarking, and deployment (or deployment support if you host the model yourself). Each model is optimized for the specific task, delivering frontier performance at a fraction of the cost.

Schedule a 15-minute consultation to discuss your use case. We'll quickly assess whether a custom model makes sense for your needs.

Availability

ClipTagger-12B is available today under the Apache 2.0 license and can be used for inference through our serverless API:

- API Access: Get $25 in free credits at inference.net/register

- Documentation: docs.inference.net/use-cases/video-understanding

- Model: Available on Hugging Face

The Shift to Task-Specific Models

ClipTagger-12B represents a broader trend in production AI. As frontier models grow larger and more expensive, the gap between their capabilities and practical deployment widens. Organizations need models that do specific tasks exceptionally well at sustainable costs.

This is where workhorse models excel. Rather than paying for general intelligence when you need specific capabilities, you deploy models optimized for your exact use case. The same distillation pipeline that created ClipTagger-12B can produce specialized models for medical imaging, industrial inspection, security monitoring, or any domain requiring visual understanding.

ClipTagger-12B was developed by Inference.net in collaboration with Grass. Special thanks to the Grass team for providing access to their video catalog and partnership throughout development. For custom model development, contact support@inference.net.

Own your model. Scale with confidence.

Schedule a call with our research team to learn more about custom training. We'll propose a plan that beats your current SLA and unit cost.